Keyword [Stochastic Depth] [EfficientNet]

Xie Q, Hovy E, Luong M T, et al. Self-training with Noisy Student improves ImageNet classification[J]. arXiv preprint arXiv:1911.04252, 2019.

1. Overview

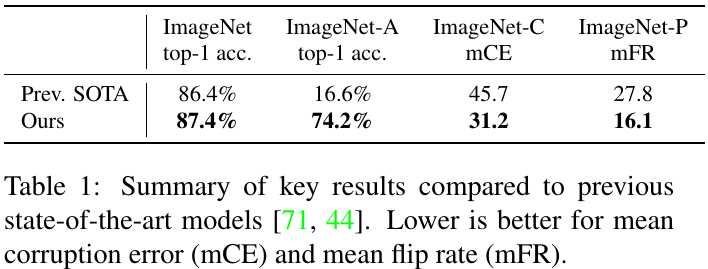

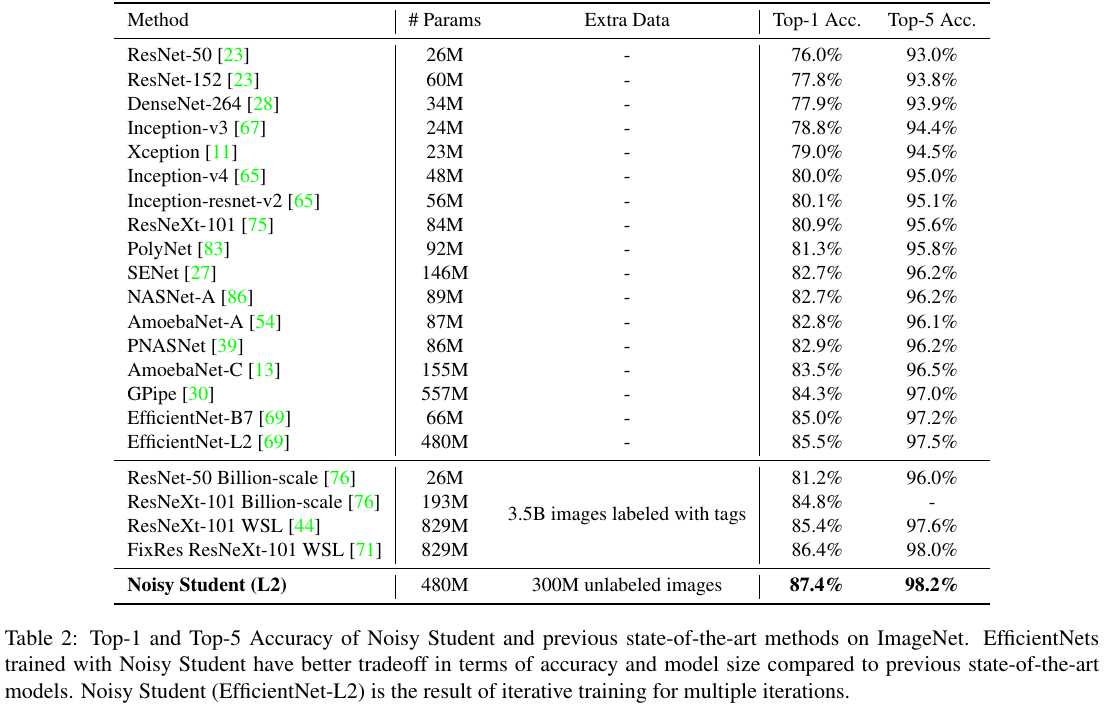

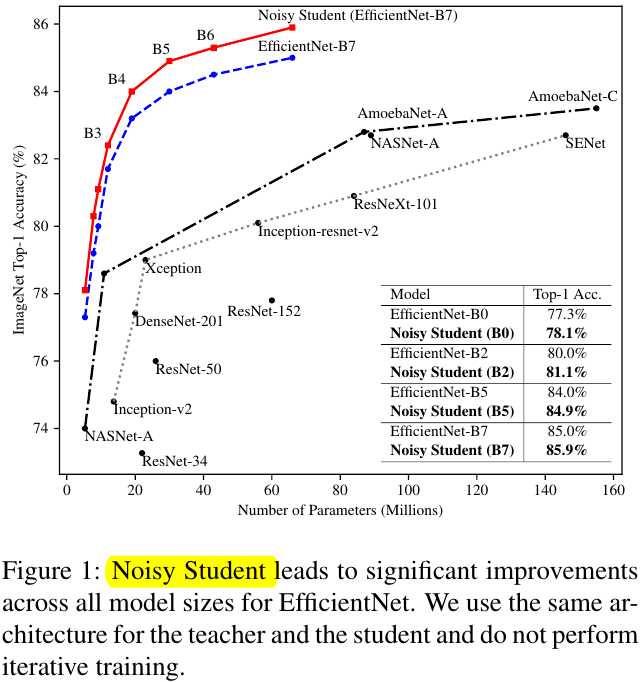

This paper proposes a self-training method, Noisy Student, that improves top-1 accuracy on ImageNet by 1%.

- Both the teacher and the student are EfficientNets.

- The student is noised during training, while the teacher is not noised when it generates pseudo labels.

- To noise the student, use dropout, data augmentation, and stochastic depth.
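The asymmetry between a clean teacher and a noised student can be sketched with a toy model. This is a minimal illustration, assuming a hypothetical residual MLP (`forward`, `weights`, and the default rates are illustrative, not the paper's EfficientNet code). Stochastic depth and dropout are active only when `train=True`; input-level data augmentation is omitted here.

```python
import numpy as np


def forward(x, weights, train, rng=None, survival_prob=0.8, dropout_rate=0.5):
    """Toy residual MLP (hypothetical, not EfficientNet).

    train=False -> clean teacher pass, as used to generate pseudo labels.
    train=True  -> noised student pass (stochastic depth + dropout).
    """
    if train and rng is None:
        rng = np.random.default_rng()
    h = x
    for w in weights:
        branch = np.maximum(h @ w, 0.0)  # residual branch: linear layer + ReLU
        if not train:
            h = h + branch  # teacher: every branch is always used
        elif rng.random() < survival_prob:
            # Student, block survives: rescale so the expected branch output
            # matches the teacher pass (inverted stochastic depth).
            h = h + branch / survival_prob
        # Student, block dropped: only the identity shortcut remains (h unchanged).
    if train:
        # Inverted dropout on the features fed to the final classifier.
        keep = rng.random(h.shape) >= dropout_rate
        h = h * keep / (1.0 - dropout_rate)
    return h


rng = np.random.default_rng(0)
x = np.ones((2, 4))
weights = [0.1 * np.eye(4) for _ in range(3)]
teacher_out = forward(x, weights, train=False)            # deterministic
student_out = forward(x, weights, train=True, rng=rng)    # random per call
```

Calling the teacher twice gives identical outputs, while every student call samples a different sub-network, which is the noise the student must become robust to.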

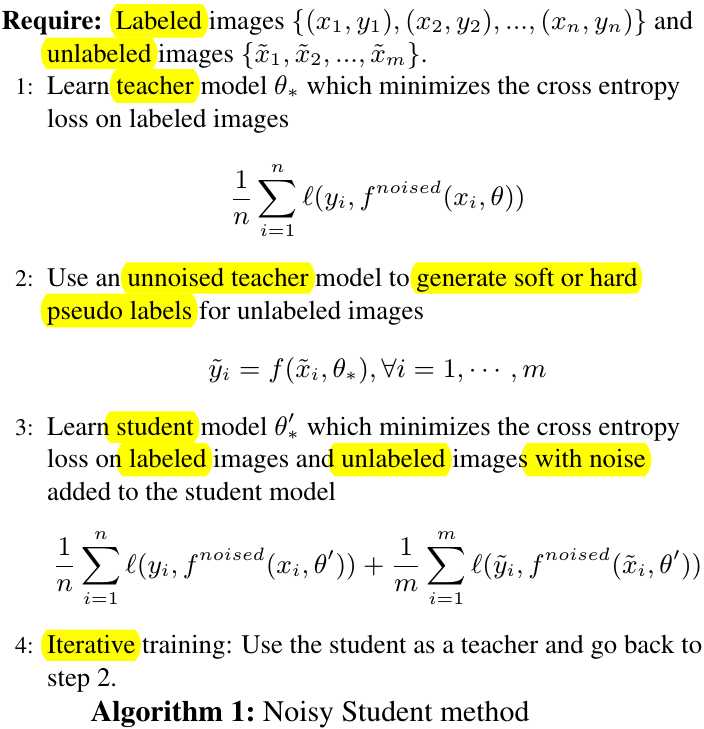

2. Algorithm

1) The noised student is trained to be consistent with the more powerful teacher, which is not noised when it generates pseudo labels.

2) When dropout and stochastic depth are used, the teacher (with nothing dropped when generating pseudo labels) behaves like an ensemble of models.

3) The student needs to be sufficiently large to fit the additional data.

4) Data balancing of the unlabeled data: duplicate images in classes that have too few, and keep only the highest-confidence images in classes that have too many.

5) Soft pseudo labels are more stable and lead to faster convergence than hard (one-hot) pseudo labels.
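Items 4 and 5 can be sketched together as one pseudo-labeling step. This is a minimal sketch under stated assumptions: each unlabeled image is assigned to the teacher's argmax class, `per_class` is a hypothetical target count per class, and the student is trained with cross-entropy against the targets. The function names are illustrative, not from the paper's code.

```python
import numpy as np


def softmax(logits):
    z = logits - logits.max(axis=1, keepdims=True)  # subtract max for stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def make_pseudo_labels(teacher_logits, per_class, soft=True):
    """Return (selected image indices, targets) after per-class balancing."""
    probs = softmax(teacher_logits)
    pred = probs.argmax(axis=1)
    conf = probs.max(axis=1)
    selected = []
    for c in range(probs.shape[1]):
        idx = np.flatnonzero(pred == c)
        if idx.size == 0:
            continue
        # Sort this class's images by teacher confidence, highest first.
        idx = idx[np.argsort(-conf[idx], kind="stable")]
        if idx.size >= per_class:
            idx = idx[:per_class]  # too many: keep the most confident images
        else:
            reps = int(np.ceil(per_class / idx.size))
            idx = np.tile(idx, reps)[:per_class]  # too few: duplicate images
        selected.append(idx)
    selected = np.concatenate(selected)
    if soft:
        targets = probs[selected]  # soft labels: full teacher distribution
    else:
        targets = np.eye(probs.shape[1])[pred[selected]]  # hard one-hot labels
    return selected, targets


def cross_entropy(student_logits, targets):
    """Mean cross-entropy of student predictions against (soft or hard) targets."""
    log_p = student_logits - student_logits.max(axis=1, keepdims=True)
    log_p = log_p - np.log(np.exp(log_p).sum(axis=1, keepdims=True))
    return float(-(targets * log_p).sum(axis=1).mean())


teacher_logits = np.array([[2.0, 0.0], [3.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
idx, soft_targets = make_pseudo_labels(teacher_logits, per_class=2)
print(idx.tolist())  # [1, 0, 3, 3]
```

Class 0 has three candidates, so the least confident one (index 2) is dropped; class 1 has only one, so it is duplicated. With `soft=True` the targets keep the teacher's uncertainty instead of collapsing it to a one-hot vector.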

3. Experiments

3.1. Details

1) Use the JFT dataset as unlabeled data.

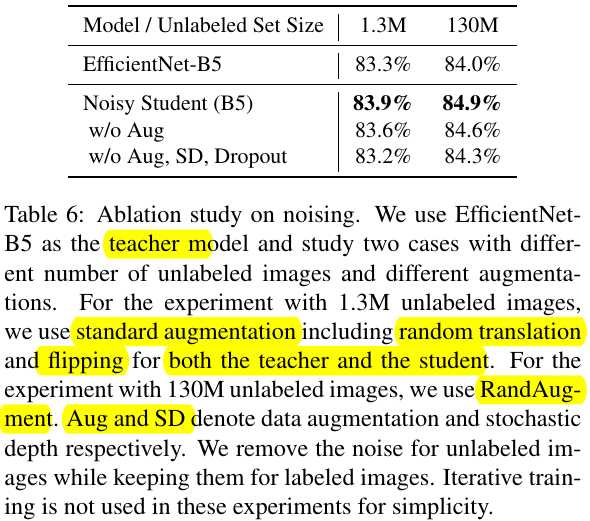

2) Stochastic Depth. Set the survival probability to 0.8 for the final layer and follow the linear decay rule for the other layers.

3) Dropout. A dropout rate of 0.5 is applied to the final classification layer.

4) RandAugment. Two random operations are applied, with the magnitude set to 27.
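The settings above can be collected in a small config, and the linear decay rule for stochastic depth (from the original stochastic depth paper) sets the survival probability of layer $l$ out of $L$ to $p_l = 1 - \frac{l}{L}(1 - p_L)$. A minimal sketch, assuming hypothetical names `StudentNoiseConfig` and `survival_probs`; real EfficientNet code may index blocks slightly differently (e.g. from 0).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StudentNoiseConfig:
    """Hypothetical container for the student-noise settings listed above."""
    final_survival_prob: float = 0.8  # stochastic depth: survival prob. of final layer
    dropout_rate: float = 0.5         # dropout on the final classification layer
    randaug_num_ops: int = 2          # RandAugment: number of random operations
    randaug_magnitude: int = 27       # RandAugment: shared magnitude


def survival_probs(num_layers: int, final_survival_prob: float) -> list:
    """Linear decay rule: p_l = 1 - (l / L) * (1 - p_L) for l = 1..L.

    Shallow layers are almost always kept; the final layer is kept with p_L.
    """
    if num_layers < 1:
        raise ValueError("num_layers must be positive")
    return [
        1.0 - (l / num_layers) * (1.0 - final_survival_prob)
        for l in range(1, num_layers + 1)
    ]


cfg = StudentNoiseConfig()
print([round(p, 3) for p in survival_probs(4, cfg.final_survival_prob)])
# [0.95, 0.9, 0.85, 0.8]
```

Decaying linearly with depth keeps early, low-level features stable while the deepest blocks are dropped most often.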

3.2. Noise Student

1) Using a larger model: +0.5%.

2) Noisy Student: +1.9%.

3.3. Adversarial Attack

3.4. Ablation Study